In 10 seconds: Artificial intelligence (AI) is revolutionizing the way we research, diagnose and treat cancer. However, it can act as a "black box" whose decisions humans can't explain, which makes its output hard to trust. This is a huge barrier to using it to help patients.

Does AI have a place in the cancer world? AI is already widely used in cancer research, where living systems are often too complex for humans to make sense of unaided. It can help by processing vast amounts of information, simplifying it, and extracting something useful from it.

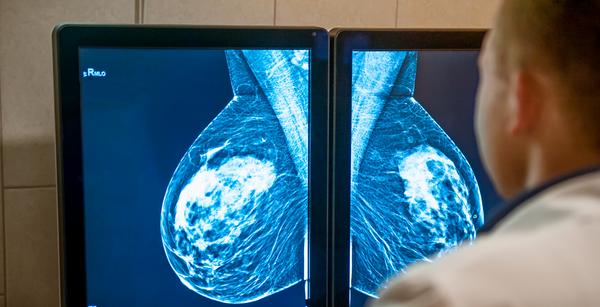

For example? In one case, an AI algorithm beat pathologists (i.e., doctors who examine biopsy samples for cancer) at detecting breast cancers that had spread to the lymph nodes. Not only was it more accurate (99% for AI vs 88% for humans), but it was also nearly twice as fast at detecting small cancerous regions. In another case, the FDA authorized AI software that assists pathologists in detecting prostate cancer. Tools like this could free up pathologists to focus on more complex cases, leading to better outcomes for patients.

Can it help doctors besides pathologists? Yes! For example, a paper published in 2022 showed that AI could detect circulating tumor cells in blood samples with high accuracy, across patients with several different cancer types. Why is this good news? Because it could enable faster diagnostic procedures, something oncologists either cannot currently do or could only do far more slowly.

If AI is so great, what’s the problem? First, we need to define what we mean by AI. In this article, we are referring to a subclass of machine learning algorithms known as “deep learning”. Deep learning is itself an umbrella term for many different “neural network” architectures: multiple layers of interconnected “nodes” (simple computational units), loosely inspired by the way neurons in the brain communicate. There's some fancy math involved, but essentially, the data you're interested in is fed into the first (or "input") layer, passes through multiple "hidden" layers of nodes, and ultimately reaches an output layer, which produces the decision you've asked for. However, some scientists, doctors, and engineers have pointed out that these algorithms can be difficult to use in patient care because some of them are a “black box”. In other words, we have no idea how they reached their decisions, and that’s a problem for liability and accountability.
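To make the "input, hidden, output" picture concrete, here is a minimal sketch of a tiny neural network's forward pass in plain Python. Everything in it is hypothetical: the four input features, the layer sizes, and the weights, which are drawn at random rather than learned from data, so the score means nothing clinically. It only illustrates how numbers flow from one layer to the next.

```python
import math
import random

def relu(x: float) -> float:
    """Hidden-layer activation: pass positive values through, zero out negatives."""
    return max(0.0, x)

def sigmoid(x: float) -> float:
    """Squash the output node's value into a 0-1 score."""
    return 1.0 / (1.0 + math.exp(-x))

def make_layer(n_in: int, n_out: int, rng: random.Random):
    """A fully connected layer: one weight per (input node, output node) pair, one bias per output node."""
    weights = [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(x, layers):
    """Feed an input vector through each layer in turn and return the output layer's values."""
    activations = x
    for i, (weights, biases) in enumerate(layers):
        # Each node sums its weighted inputs plus its bias...
        z = [sum(w * a for w, a in zip(row, activations)) + b for row, b in zip(weights, biases)]
        # ...then applies an activation: ReLU in hidden layers, sigmoid at the output.
        is_output = i == len(layers) - 1
        activations = [sigmoid(v) for v in z] if is_output else [relu(v) for v in z]
    return activations

rng = random.Random(0)
# Hypothetical sizes: 4 input features -> two hidden layers of 8 nodes -> 1 output node.
layers = [make_layer(4, 8, rng), make_layer(8, 8, rng), make_layer(8, 1, rng)]
score = forward([0.2, 1.5, -0.3, 0.8], layers)[0]

# Count every adjustable number (weights and biases) in the network.
n_params = sum(len(w) * len(w[0]) + len(b) for w, b in layers)
print(f"output score: {score:.3f}")
print(f"parameters: {n_params}")  # 121
```

Even this toy network has 121 adjustable parameters; real diagnostic models often have millions, which is why tracing how any single number shaped the final decision is so hard.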

If AI does the job, why is its complexity a problem? The issue arises most often when AI is used to make critical decisions, such as in a surgical setting. Researchers and doctors ask themselves: what if the way the algorithm makes its decisions overlooks an important subgroup of cancers, or even whole groups of people? You could end up with an incorrect diagnosis, leading to the wrong treatment and, ultimately, unnecessary suffering or financial burden. It is therefore difficult for humans to trust a decision that cannot easily be explained or interpreted.

So, when is AI OK to use? In some scenarios, the "AI black box" may matter less, because we can check its results using "ground truth analysis". For example, biopsies that AI scores as positive for cancer can be cross-checked by a pathologist. In cases like this, researchers can look at thousands of examples and make sure the algorithm scores them correctly, even the difficult ones. In fact, AI is currently used more often to support clinicians' decisions, for example when diagnosing cancer from biopsies or from medical images such as CT scans.
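A "ground truth" check of this kind boils down to counting agreements and disagreements. The sketch below assumes the pathologist's call is the reference standard and uses ten made-up biopsy results; the function name and data are illustrative only. Sensitivity (the share of real cancers the AI caught) and specificity (the share of cancer-free samples it correctly cleared) are standard measures, and the disagreements are the cases a pathologist would want to look at again.

```python
def check_against_ground_truth(ai_calls, truth):
    """Score AI biopsy calls (True = cancer) against reference calls.

    Assumes at least one positive and one negative reference case.
    """
    if len(ai_calls) != len(truth):
        raise ValueError("need exactly one AI call per reference call")
    pairs = list(zip(ai_calls, truth))
    tp = sum(1 for ai, ref in pairs if ai and ref)          # cancers the AI caught
    fn = sum(1 for ai, ref in pairs if not ai and ref)      # cancers the AI missed
    tn = sum(1 for ai, ref in pairs if not ai and not ref)  # clear samples correctly cleared
    fp = sum(1 for ai, ref in pairs if ai and not ref)      # false alarms
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        # Cases where AI and pathologist disagree: candidates for a second look.
        "disagreements": [i for i, (ai, ref) in enumerate(pairs) if ai != ref],
    }

# Hypothetical results for ten biopsies.
pathologist = [True, True, True, False, False, False, False, True, False, False]
ai = [True, True, False, False, True, False, False, True, False, False]

result = check_against_ground_truth(ai, pathologist)
for name in ("accuracy", "sensitivity", "specificity"):
    print(f"{name}: {result[name]:.2f}")
print("review these samples:", result["disagreements"])
# accuracy: 0.80
# sensitivity: 0.75
# specificity: 0.83
# review these samples: [2, 4]
```

A real validation would run over thousands of cases, including deliberately difficult ones, but the bookkeeping is the same.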

What can be done to help AI be more useful to patients and doctors? Efforts are underway to make algorithms easier to explain. In fact, there's a whole field of study known as explainability! Its methods can be classified in multiple ways, such as “global” vs “local”, depending on whether the method explains the model's overall behavior or a single AI decision, respectively. Another classification is “ante-hoc” vs “post-hoc”: either the algorithm is designed to be more explainable from the outset (ante-hoc), or techniques are applied after it has been built to explain how it works (post-hoc). One such post-hoc method is “SHAP” (Shapley Additive Explanations). Essentially, this is a “perturbation method”: it removes (masks) the input features used by the algorithm and measures how the predicted outcome score changes, averaging each feature's effect over many combinations of the other features. It can then rank the features by importance, in other words, by how much each one affects the output prediction, so you can start to understand how the model reaches its decision. Similar explainability methods have now been applied in many cancer diagnostic scenarios, and work is ongoing to improve them.
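The idea behind SHAP can be shown with exact Shapley values on a toy model. In this sketch the "model" is a hypothetical three-feature scoring formula (not a deep learning network), the feature names are made up, and a feature is "removed" by swapping in a baseline value, which is one common convention. Each feature's Shapley value is its change to the score averaged over every combination of the other features, using the standard Shapley weights. Exact computation grows exponentially with the number of features, which is why tools such as the open-source `shap` package mostly rely on approximations for deep learning models.

```python
from itertools import combinations
from math import factorial

FEATURES = ["tumor_size_cm", "marker_level", "age"]  # hypothetical inputs

def toy_model(f):
    """Hypothetical risk score: size and marker interact, and age is ignored entirely."""
    size, marker, _age = f
    return 2.0 * size + 1.0 * marker + 0.5 * size * marker

def shapley_values(model, x, baseline):
    """Exact Shapley value of each feature of input x, relative to a baseline input."""
    n = len(x)

    def value(kept):
        # Features in `kept` keep this patient's values; the rest are "removed" (set to baseline).
        return model([x[j] if j in kept else baseline[j] for j in range(n)])

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for k in range(n):
            # Shapley weight for a coalition of k other features: k! (n - k - 1)! / n!
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for coalition in combinations(others, k):
                kept = set(coalition)
                # Marginal effect of adding feature i to this coalition.
                phi += weight * (value(kept | {i}) - value(kept))
        phis.append(phi)
    return phis

x = [3.0, 2.0, 70.0]         # one hypothetical patient
baseline = [1.0, 1.0, 55.0]  # hypothetical reference values
phis = shapley_values(toy_model, x, baseline)

# Rank features by the size of their contribution for this patient.
for name, phi in sorted(zip(FEATURES, phis), key=lambda p: abs(p[1]), reverse=True):
    print(f"{name}: {phi:+.2f}")
# tumor_size_cm: +5.50
# marker_level: +2.00
# age: +0.00
```

These per-patient values are a local explanation; averaging their absolute values across many patients gives a global picture of which features the model leans on. The values also add up to the gap between the patient's score and the baseline score (here 11.0 minus 3.5, or 7.5), which is the "additive" part of the name.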

What are the next steps? AI continues to be adopted apace in the cancer world, especially in research, where it is used far more routinely as more scientists get a grip on the technology. However, getting AI to help patients in the clinic for diagnosis and treatment requires more time and further proof that these tools are better than those currently in use. Regulators have made strides to smooth the process: the FDA, for example, has published its “Artificial Intelligence and Machine Learning in Software as a Medical Device” action plan, which sets out a framework to help developers understand how to take their ideas into the clinic while maintaining patient safety. Questions remain over the quality of an explanation: what even qualifies as a "good explanation"? With time and the engagement of all stakeholders, from patients and doctors to regulators and the legal system, we will start to see more AI in the clinic, to the benefit of patients.

The ethical implications of AI in the medical setting

There are multiple ethical considerations when implementing AI in a clinical setting. Broadly speaking, they sit in three categories: data protection, fairness/avoiding bias, and transparency (aka explainability).

Bias is a particularly important concern, as AI systems have been shown to make biased decisions that reflect the data they were trained on. For example, if an algorithm is trained mostly on data from older white men, it may struggle to diagnose other groups accurately. To avoid this, developers need to broaden their datasets or make more representative datasets available to other researchers.

In addition, regulators like the FDA need to require representative datasets as part of their approval process. More explainable algorithms that humans can understand would also make this kind of bias much easier to spot and avoid!
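One practical way to spot the kind of bias described above is to break an algorithm's performance down by patient subgroup instead of reporting a single overall figure. The sketch below uses made-up records with hypothetical groups "A" and "B" and an arbitrary tolerance of 0.10; it computes sensitivity (the share of real cancers caught) per group and flags any group that falls well short of the overall rate.

```python
from collections import defaultdict

def sensitivity_by_group(records):
    """records: (group, ai_positive, has_cancer) tuples. Returns the share of cancers caught per group."""
    caught = defaultdict(int)
    cancers = defaultdict(int)
    for group, ai_positive, has_cancer in records:
        if has_cancer:
            cancers[group] += 1
            if ai_positive:
                caught[group] += 1
    return {group: caught[group] / cancers[group] for group in cancers}

# Hypothetical validation records.
records = (
    [("A", True, True)] * 5      # group A: 5 cancers, all caught
    + [("A", False, False)] * 3
    + [("B", True, True)] * 2    # group B: 4 cancers, only 2 caught
    + [("B", False, True)] * 2
    + [("B", False, False)] * 3
)

per_group = sensitivity_by_group(records)
overall = sum(1 for _, ai, c in records if ai and c) / sum(1 for _, _, c in records if c)
TOLERANCE = 0.10  # hypothetical: how far below the overall rate a group may fall before review
flagged = [g for g, s in per_group.items() if overall - s > TOLERANCE]

print(f"overall sensitivity: {overall:.2f}")
for g, s in per_group.items():
    print(f"group {g}: {s:.2f}")
print("groups needing attention:", flagged)
# overall sensitivity: 0.78
# group A: 1.00
# group B: 0.50
# groups needing attention: ['B']
```

Here a respectable-looking overall figure of 0.78 hides the fact that the algorithm misses half the cancers in group B.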

Dr. Ed Law has curated 8 papers, saving you 28 hours of reading time.

The Science Integrity Check of this 3-min Science Digest was performed by Dr. Talia Henkle.